Training ML models directly from GitHub with cnvrg.io MLOps

In this post, I’ll show you how to train machine learning models directly from GitHub. I originally presented this workshop at GitHub Satellite 2020, and the recording is now available to view.

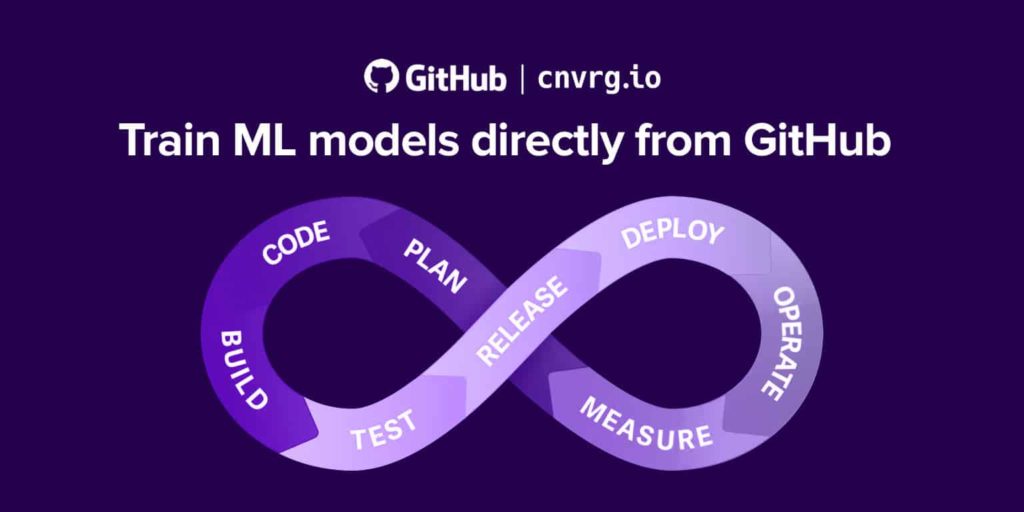

We all know software CI/CD. You code, you build, you test, you release. With machine learning it’s similar, but also quite different. You start with data, which you validate, process and analyze. You then train the model on a remote GPU or on a cluster of your own. You run model validation, you deploy the model, and you make predictions. Once your model is deployed to production, you monitor the predictions. If the model shows signs of performance drift, you might even retrain it. What we’re suggesting in this post is a simple way to train models directly from GitHub. In other words, it’s a GitHub plus MLOps solution.

What is MLOps?

MLOps (a compound of “machine learning” and “operations”) is a practice for collaboration and communication between data scientists and operations professionals to help manage the production machine learning lifecycle. Similar to the DevOps term in the software development world, MLOps looks to increase automation and improve the quality of production ML while also focusing on business and regulatory requirements. MLOps applies to the entire ML lifecycle – from integrating with model generation (software development lifecycle, continuous integration/continuous delivery), orchestration, and deployment, to health, diagnostics, governance, and business metrics.

How to train your ML model from GitHub

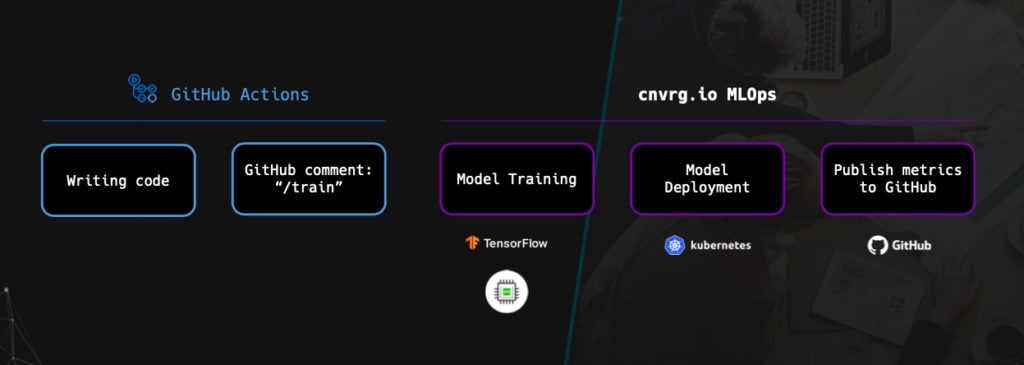

To build this capability of training models directly from GitHub, we used GitHub Actions, a way to automate development workflows. Here’s how it works: once you’ve written your code, you push it to a specific branch on GitHub and create a pull request. Commenting “/train” on the PR then triggers model training with cnvrg. Just like that, the command automatically provisions resources and starts the training pipeline.
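To make the trigger step concrete, here is a minimal Python sketch of how a “/train” comment could be detected. It is not the code of the Action described in this post. It assumes the event is a GitHub `issue_comment` webhook payload, where a comment on a pull request carries a `pull_request` key under `issue`. The function name `parse_train_command` and the idea of passing extra arguments after `/train` are hypothetical and only for illustration.

```python
TRAIN_COMMAND = "/train"  # the comment command described in this post


def parse_train_command(payload):
    """Return the arguments following '/train' if the event is a new
    comment on a pull request that contains the command, else None.

    `payload` is assumed to be a parsed GitHub `issue_comment` event.
    Supporting extra arguments after the command is a hypothetical
    extension, not something the original Action is known to do.
    """
    # Only react to newly created comments, not edits or deletions.
    if payload.get("action") != "created":
        return None

    # Comments on plain issues have no "pull_request" key under "issue".
    if "pull_request" not in payload.get("issue", {}):
        return None

    body = payload.get("comment", {}).get("body") or ""
    for line in body.splitlines():
        tokens = line.split()
        # The command must be the first token on its own line.
        if tokens and tokens[0] == TRAIN_COMMAND:
            return tokens[1:]
    return None
```

A caller would start the training pipeline only when the function returns a list (possibly empty) and ignore the event when it returns `None`.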

This training pipeline trains a TensorFlow model on a remote GPU, deploys the model on Kubernetes, and finally publishes its metrics back to GitHub.

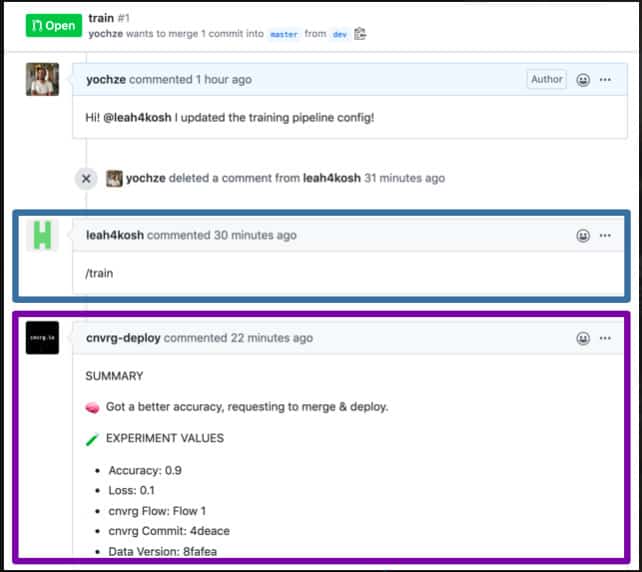

Above is a real example of how the GitHub Action we built works (we also created a webinar just for that). As you can see, I pushed new code to GitHub. Then leah4kosh, my cofounder and CTO, commented /train on my pull request, which triggered model training and pushed the results back to the pull request.

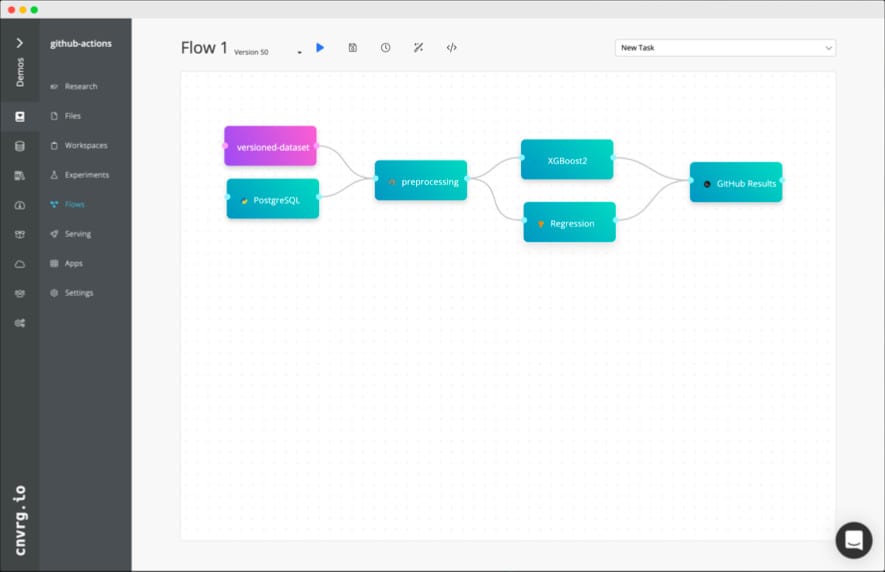

This is what the training pipeline looks like in cnvrg.io. As you can see, the last step of the pipeline pushes the results back to GitHub. During execution, cnvrg tracks all the different models, parameters and metrics, and posts them back to the same PR from which the training was triggered.
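As a rough illustration of the “post results back” step, the sketch below turns a dictionary of metrics into a markdown table that could serve as the body of a PR comment. It does not reproduce how cnvrg formats its results. The function name `format_metrics_comment`, the run name, and the metric names and values are all hypothetical placeholders.

```python
def format_metrics_comment(run_name, metrics):
    """Build a markdown comment body from a {metric_name: value} mapping.

    Floats are rounded to four decimal places; other values are shown
    as-is. The layout is an assumption made for illustration only.
    """
    lines = [
        f"### Training results: {run_name}",
        "",
        "| Metric | Value |",
        "| --- | --- |",
    ]
    # Sort metric names so the table is stable between runs.
    for name in sorted(metrics):
        value = metrics[name]
        shown = f"{value:.4f}" if isinstance(value, float) else str(value)
        lines.append(f"| {name} | {shown} |")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder values, not real training results.
    print(format_metrics_comment("example-run", {"accuracy": 0.5, "epochs": 3}))
```

The resulting string can be posted as a comment by whatever mechanism the workflow already uses to talk to GitHub.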

To build this GitHub Action, we used ChatOps to listen for comments on the pull request. We use Ruby to install the cnvrg CLI, and then use the cnvrg CLI to run the machine learning training pipeline.

And there you have it! This is how you train an ML model directly from GitHub. Get started with cnvrg.io CORE, the free community version of cnvrg.io ML platform: