Machine Learning Operations (MLOps)

Deliver a streamlined ML pipeline, minimizing friction between data science and engineering teams from research to production

The Top Solution for MLOps and Model Management

According to industry reports, more than 80% of machine learning models don’t make it to production. This is often because most of a data scientist’s time is spent on non-data-science tasks such as DevOps, infrastructure configuration, versioning, data management, experiment tracking, visualization, deployment, performance monitoring, and framework management. The challenge lies in finding professionals who know DevOps and engineering best practices and also understand the complexity of machine learning in production. Persistent DevOps bottlenecks make it difficult for teams to operationalize their machine learning predictions and deliver real business value from their models.

Many organizations underestimate the technical complexity and effort it takes to deliver machine learning models to production in real-world applications. It is not uncommon for organizations to spend more on infrastructure development, consume far more resources, and take much longer than anticipated before seeing any real results.

MLOps addresses this challenge by reducing friction and bottlenecks between ML development teams and engineering teams so that models can be operationalized. As the name indicates, MLOps adapts DevOps practices to the unique needs of machine learning and AI development. It is a discipline that seeks to systematize the entire ML lifecycle. In the enterprise, MLOps helps teams productionize machine learning models and automate DevOps tasks, so data scientists can focus less on technical complexity and more on delivering high-impact machine learning models.

Standardize and Optimize Machine Learning Workflows

Integrated and Open Machine Learning Stack

- AI ready infrastructure integrates easily with existing data architecture

- Open container-based platform offers flexibility and control to use any Docker image or tool

- Multi-cloud/Hybrid-cloud and on-premise utilization with extensive resource management and cluster orchestration

- Quickly deploy AI frameworks and libraries to get a head start with pre-trained models or model training scripts, and use domain-specific workflows

- Deploy your ML projects on modern production infrastructures with native Kubernetes

- Connect any data source, use any compute and run any framework or AI library

- Choose to interact through Web UI, CLI (Command Line Interface), SDK or REST API

Scalable, Reproducible ML Pipelines from Research to Production

- Full version control and end to end traceability for easily reproducible machine learning models

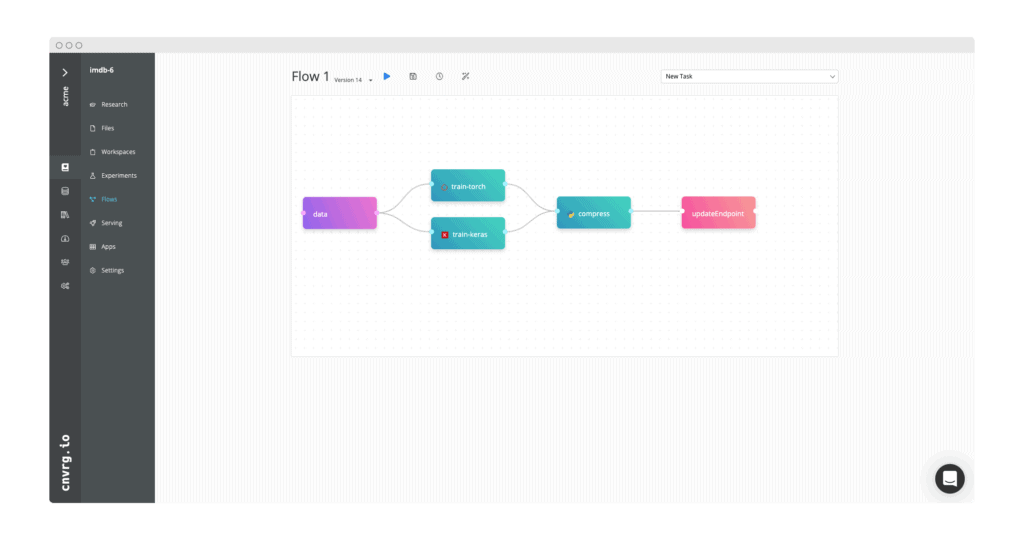

- Customizable production ready drag and drop ML pipelines with custom compute attachments for each ML job

- Quickly run hundreds of experiments in parallel with hyperparameter optimization

- Operationalize your models to production in one click as a web service, streaming endpoint or batch

- Share and reuse prebuilt ML components from the AI library

- Easily auditable for better regulation and governance

- Integrated scheduler & resource manager to automate ML workloads, monitor execution & infrastructure, set up alerts, prioritize jobs, access full logs and analyze results

Production-ready ML Models Built for Performance

- Deliver robust, production ready models with CI/CD integration, and apply advanced retraining triggers with human validation points in case of performance drift

- Monitor model performance with advanced visualizations in Kibana and Grafana

- Publish your dashboard and reports in one click to stakeholders with Voila, Dash, or Shiny

- Automate ML pipeline retraining triggers to optimize model performance with continual learning

- Establish a feedback loop for the data science team to iterate and improve production models while ensuring updated models don’t negatively impact application performance

Extend Resources with Multi-Cloud and Hybrid-Cloud Capabilities

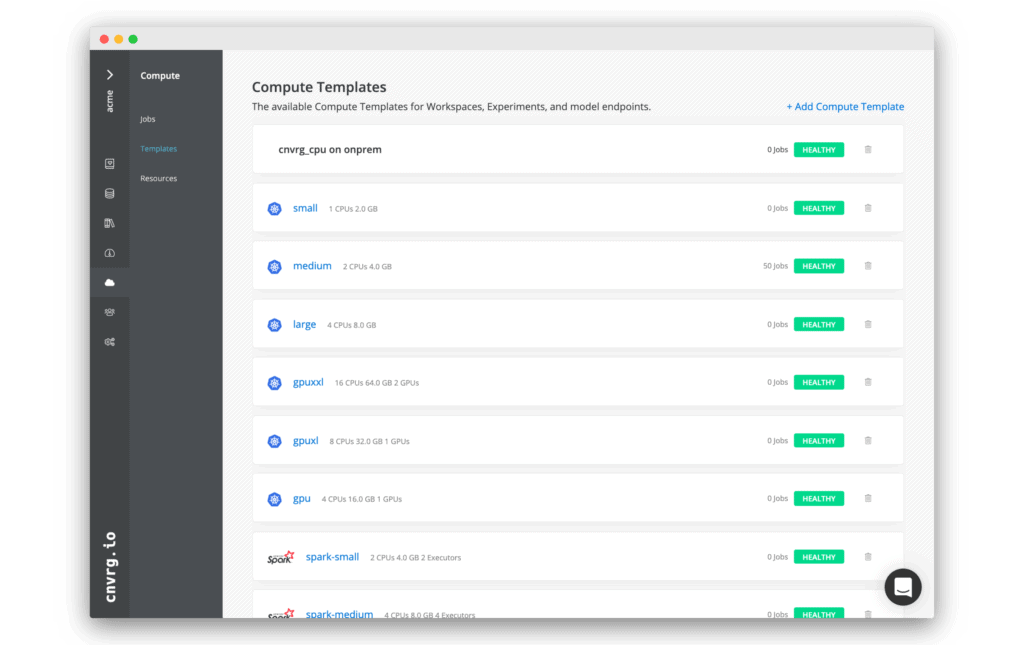

- Manage all ML compute resources in a unified and secure environment with advanced monitoring and administration capabilities built in

- Enable data scientists to rapidly launch ML workloads on remote clusters without tinkering with infrastructure or complicated configuration

- Optimize compute utilization with advanced resource management

- Control compute consumption with advanced administrative policy setting capabilities

- Ability to attach different resources to different ML jobs easily

- Kubernetes based scheduler automates policy-based workflows

Machine learning model lifecycle automation

MLOps covers the whole machine learning (or deep learning) lifecycle, from training to model deployment, monitoring, and re-deployment of full ML pipelines. With MLOps, teams bridge data science and engineering in a clear, collaborative machine learning management environment from research to production. cnvrg.io takes care of all the “plumbing” so data scientists and ML engineers can focus on building solutions, gathering insights, and delivering business value in production.

Code-First ML Platform

Build advanced algorithms with the ability to use custom code anywhere in the ML pipeline

Version Control

End to end version control of project files, artifacts, datasets and full ML pipeline runs

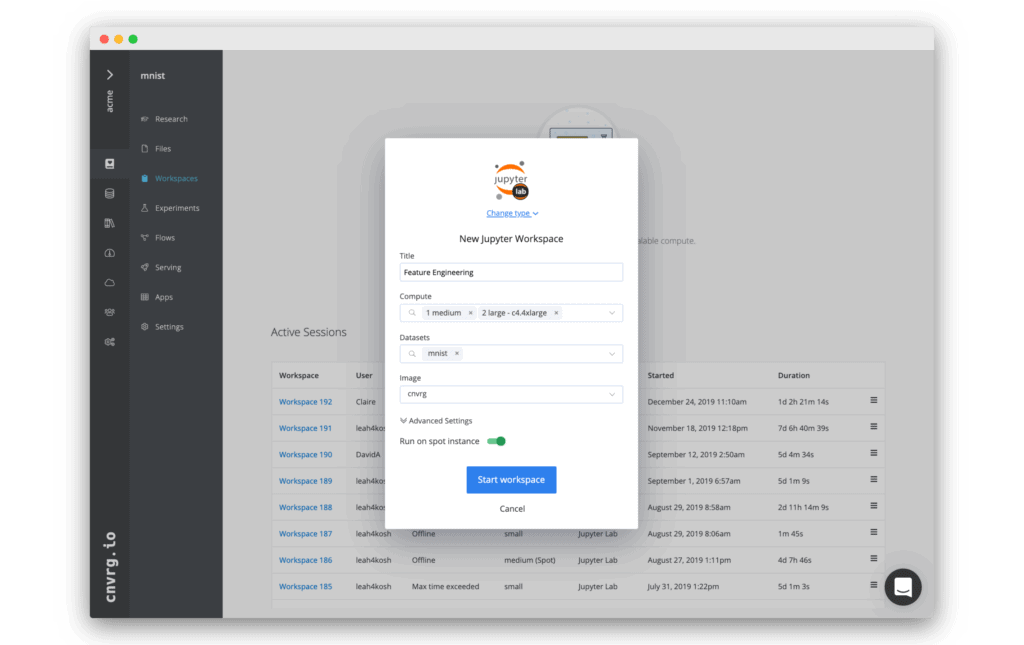

Interactive Workspaces

Built-in support for JupyterLab, JupyterLab on Spark, RStudio and Visual Studio Code, all running on remote compute

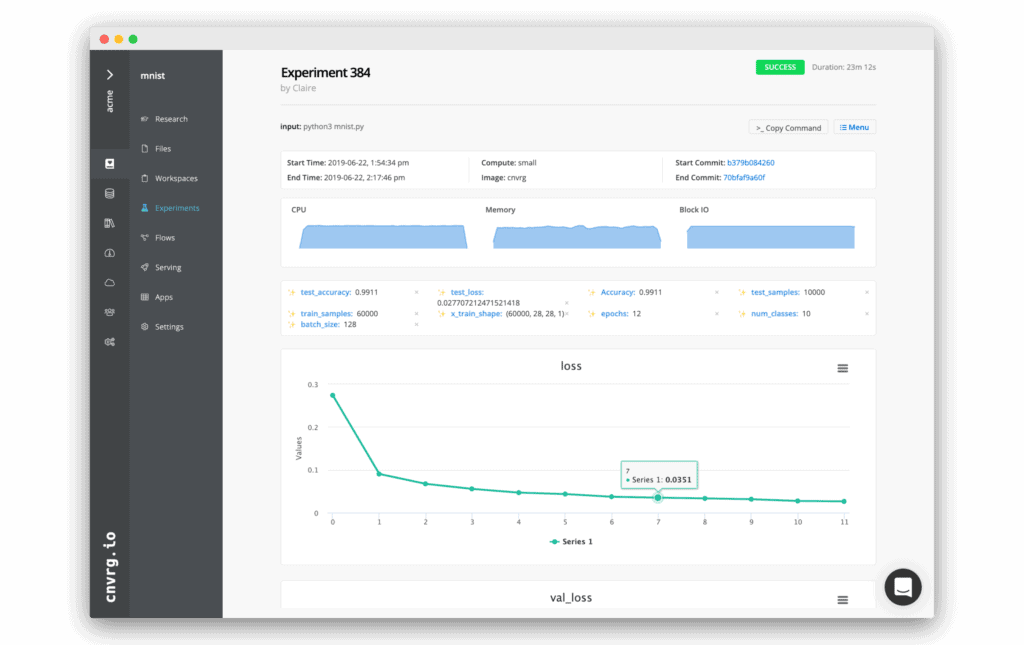

Rapid Experimentation

Run, track and compare multiple experiments in parallel from a single command, using grid searches and hyperparameter optimization
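To make the idea of a parallel grid search concrete, here is a minimal, platform-independent sketch using only the Python standard library. It is not cnvrg.io's API: `run_experiment`, `grid_search`, and the toy scoring function are hypothetical stand-ins for a real training run.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def run_experiment(params):
    """Hypothetical stand-in for one training run; returns a validation score."""
    lr, depth = params["learning_rate"], params["max_depth"]
    # Toy objective (an assumption for illustration) that peaks at
    # learning_rate=0.1 and max_depth=6.
    return 1.0 - abs(lr - 0.1) - 0.01 * abs(depth - 6)


def grid_search(grid, max_workers=4):
    """Score every combination in the grid in parallel.

    Returns (best_params, best_score, all_results).
    """
    names = sorted(grid)
    combos = [dict(zip(names, values))
              for values in product(*(grid[n] for n in names))]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(run_experiment, combos))
    results = list(zip(combos, scores))
    best_params, best_score = max(results, key=lambda r: r[1])
    return best_params, best_score, results


grid = {"learning_rate": [0.01, 0.1, 0.5], "max_depth": [3, 6, 9]}
best_params, best_score, results = grid_search(grid)
print(best_params, round(best_score, 3))
```

Threads are enough for this toy objective. Real training runs are usually CPU- or GPU-bound, so in practice each combination would more likely be dispatched as a separate process or cluster job.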

ML Pipelines

Production-ready drag and drop ML pipelines to run your ML components quickly and easily

AI Library

Reuse algorithms, pipelines, and ML tasks with a modular code component package manager

Resource Management

Instantly run on any pre-configured compute, whether Kubernetes, Spark or on-premise, and monitor it with dashboards

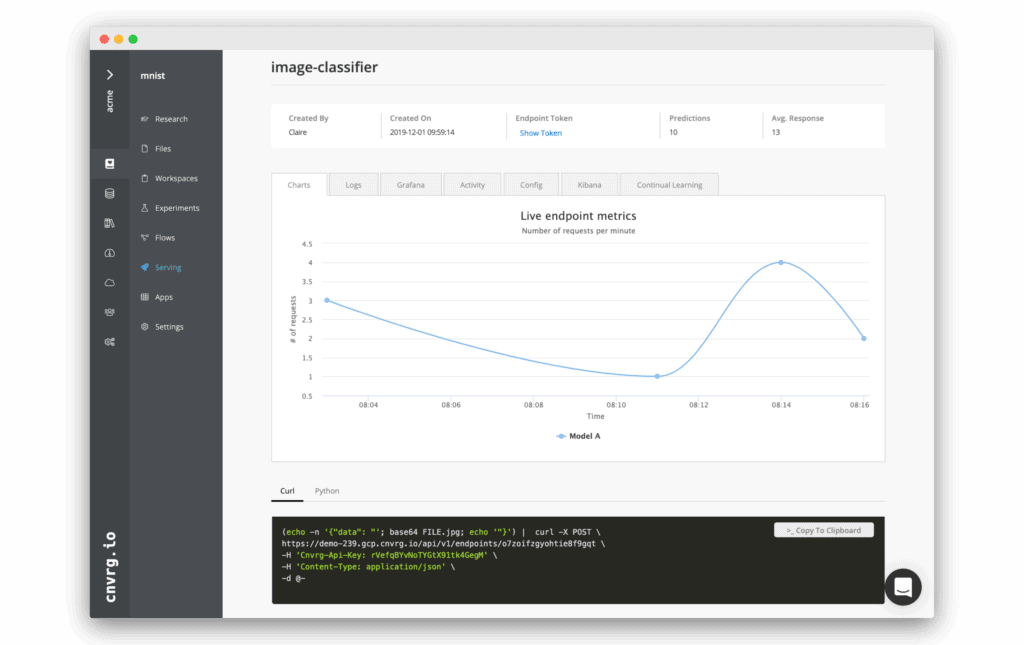

Model Deployment

One click model deployments on Kubernetes via web service, Kafka streams or batch predictions

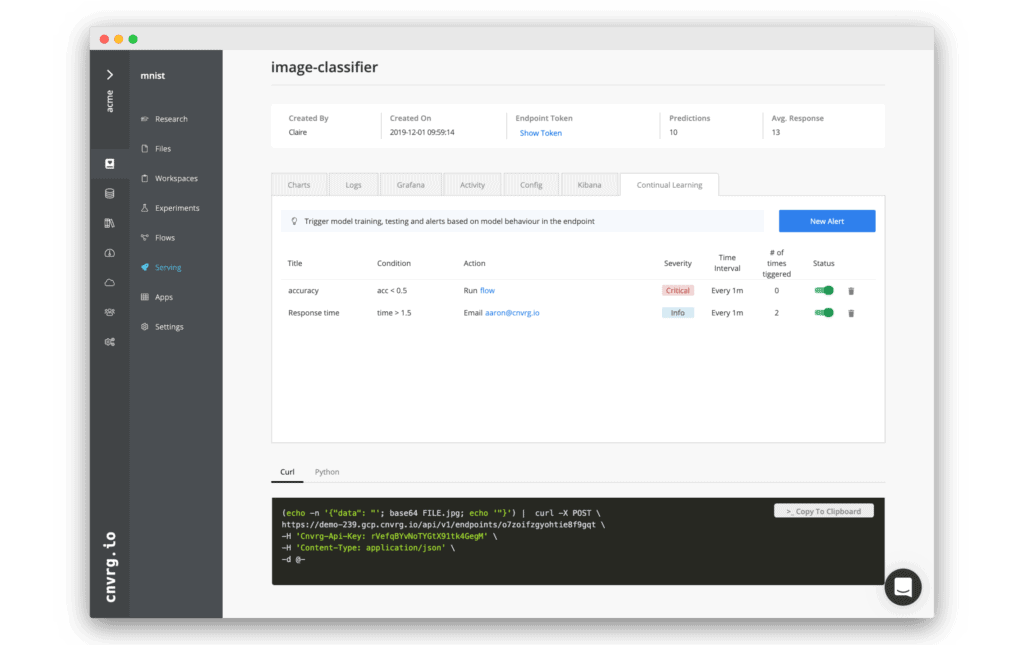

Model Monitoring

Monitor model performance via live charts showing traffic, latency, drift and accuracy

Continual Learning

Create a feedback loop with advanced retraining triggers to improve models in production

cnvrg.io Platform Capabilities

cnvrg.io delivers accelerated ML workflows with end-to-end MLOps. Spend less time on DevOps and more on building high-impact ML models. cnvrg.io’s container-based infrastructure helps simplify engineering-heavy tasks like tracking, monitoring, configuration, compute resource management, serving infrastructure, feature extraction, and model deployment. Teams using cnvrg.io reduce hidden technical debt, allowing data scientists to focus on productivity and increase business value. With cnvrg.io, companies can:

- Quickly deploy scalable machine learning models written in any language, using any framework in one click, on modern production infrastructures such as Kubernetes on any cloud or on-premise system

- Improve model performance in production with advanced monitoring of data drift, accuracy and latency and set alerts to provide timely updates that don’t waste resources

- Iterate on models with continuous testing and validation on updated data while continuing to serve business applications

- Enforce governance policies related to machine learning models and retrace results, including who is publishing models, why changes are being made, and which models have been deployed over time

- Optimize resource utilization of machine learning workloads, reducing wasted compute

FOR DATA SCIENTISTS

Experiment at scale

Spend more time on data science and less time on technical complexity so you can deliver more high impact models

- Reproducible ML Pipelines

- Automatic End to End Version Control

- Tool, Language and Framework Agnostic

FOR DATA SCIENCE LEADERS

Deliver more value for less

Streamline the ML workflow and increase your team’s productivity, so they can deliver high impact and peak performing models that drive value

- Production-ready Pipelines

- Enhanced Governance

- AI Ready Infrastructure

FOR ML ENGINEERS

Accelerate time to production

Deliver more models to production with a scalable and secure AI ready infrastructure out of the box

- Simple Cluster Orchestration

- Canary Deployment and CI/CD Capabilities

- One Click Model Deployments

Streamline Collaboration Between Data Science and IT/Ops

Running ML systems in production is a complex challenge that requires knowledge of machine learning, software engineering, and DevOps. Without MLOps to streamline the ML workflow, most of a data scientist’s time is consumed by the infrastructure, feature engineering, deployment, and monitoring work involved in delivering machine learning solutions. Then, once in production, ML models often fail to adapt to changes in the environment and to new data, which hurts performance.

To achieve a streamlined process, companies must reduce friction wherever possible throughout the ML pipeline. MLOps helps teams deploy faster, more easily, and more often; centralizes model tracking, versioning and monitoring; unifies technologies; and enhances collaboration. MLOps also supports model improvement through continual learning and an established CI/CD (continuous integration/continuous delivery) process suited to ML systems.

Why is cnvrg.io different?

Automated Model Containerization

Open container-based platform offers flexibility and control to use any image or tool

Scalability

cnvrg.io grows with your organization, helping you deliver more models and easily adopt more compute and the industry’s latest tools and frameworks

Hybrid Cloud and On Premise

Whether your infrastructure is designed for on-premise, multi-cloud, or both, cnvrg.io works across AWS, Azure, and Google Cloud, as well as on-premise options

Open & Flexible

Quickly unify all your team’s favorite ML tools, frameworks and resources with no vendor lock-in

Model Reproducibility

Easily reproduce results with fully versioned ML pipelines, datasets and a library of reusable ML components

Resource Management

cnvrg.io provides extensive tools for resource management to help your IT team utilize and control all compute resources

MLOps Resources

MLOps Examples With cnvrg.io

- S3

- GitHub

- NVIDIA GPUs

- Python

Train and deploy using NVIDIA deep-learning containers

Load data from S3 object storage, train with both TensorFlow and PyTorch deep-learning containers on NVIDIA GPUs, pick the champion model, and deploy it to a production endpoint.
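The "pick the champion model" step can be sketched independently of any platform. The example below is a hypothetical illustration, not cnvrg.io's API: the `Candidate` fields, the latency budget, and the metric values are made-up placeholders rather than measured results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One trained model plus the metrics recorded for it (hypothetical schema)."""
    name: str
    framework: str
    val_accuracy: float
    latency_ms: float


def pick_champion(candidates, max_latency_ms=100.0):
    """Return the most accurate candidate that fits the latency budget."""
    eligible = [c for c in candidates if c.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no candidate meets the latency budget")
    return max(eligible, key=lambda c: c.val_accuracy)


# Placeholder numbers for illustration only.
candidates = [
    Candidate("resnet-tf", "tensorflow", 0.912, 42.0),
    Candidate("resnet-pt", "pytorch", 0.921, 55.0),
    Candidate("large-pt", "pytorch", 0.934, 180.0),
]
champion = pick_champion(candidates)
print(champion.name, champion.framework)
```

Filtering on a serving constraint before ranking by accuracy keeps a model that is slightly more accurate but too slow for production from being promoted.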

MLOps - FAQ

Machine Learning Operations, commonly abbreviated as MLOps, is a methodological framework for collaboration and communication between data scientists and operations personnel. Its purpose is to manage the production ML lifecycle, ensuring efficient, smooth collaboration between data science and engineering and removing obstacles to swift delivery into production. It is often described as agile for data science workflows, and it helps establish a cleaner communication channel.

MLOps has quickly become a standard practice for enterprise machine learning development, helping teams manage the entire ML lifecycle from research to production. Data scientists and engineering professionals often operate in silos, making it challenging for teams to work together toward organization-wide goals. MLOps aims to streamline the development, deployment, and operationalization of ML models. It supports the build, test, release, monitoring, performance tracking, reuse, maintenance and governance of ML models. In short, MLOps is the connective tissue between the data science team and DevOps or engineering units, keeping the whole ML pipeline running without friction.

A major focus of cnvrg.io is getting more models to production and keeping them running at optimized performance. We’ve made deployment of any ML application simple. In one click, any model can be deployed as a scalable REST API based on Kubernetes. Once a model is published, data scientists and engineers have complete control over it in production, with an in-depth visual dashboard to monitor all parameters. Users can set alerts for underperforming models and set up automatic retraining triggers (continual learning) so that models remain at peak performance while in production, with zero downtime.

Applying ML to real-world applications is unlike deploying traditional software. Not all data scientists come from a DevOps background, and they do not always follow best practices for deploying and managing machine learning applications. ML can also impact society in ways that traditional software applications don’t. Without MLOps, enterprise ML development is slow and hard to standardize and scale. In addition, the many tools and frameworks involved are scattered and disconnected, which makes standardization very difficult.

Companies often spend most of their effort on building their models and making them as good as possible. But building the model is only half the work. Maintaining a model in production brings challenges of its own. First, it’s rare to have engineers who are trained in the unique requirements of managing a model in production, which is quite different from deploying a typical application. Second, models need to be prepared to handle a burst of inputs at any time, with the right compute available and automated to scale with demand. Another major challenge is monitoring specific parameters in real time. Models also need a lot of tweaking and maintenance while in production. They are not static: they should continue to learn in production and be updated regularly with current data to maintain accuracy. Otherwise, stale models can have serious consequences for companies and for society. Fortunately, MLOps addresses most of these challenges and reduces friction.

Contact us to discuss your project: our team of ML specialists will be happy to discuss a solution for your use case and needs

In the same way that DevOps frees developers from infrastructure issues, allowing them to concentrate on application development, MLOps helps create the simple, easy-to-use research environment that data scientists need in order to focus on model development rather than infrastructure configuration and monitoring. However, the infrastructure for serving machine learning applications is quite different from that for regular software. Unlike software, machine learning requires a lot of experimentation that needs to be traceable and reproducible with every iteration. This makes tracking systems much more important for MLOps. Machine learning and deep learning are also heavily affected by changing data, which can cause data drift that degrades the model in production. ML models need to be updated constantly in order to stay accurate, whereas conventional software that works well can often be left as it is. In addition to software CI/CD, MLOps therefore requires continual learning, or continuous retraining and serving.
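As a rough illustration of drift-triggered retraining with a human validation point, the sketch below computes the Population Stability Index (PSI), one common drift measure, between training-time and production values of a feature. This is not cnvrg.io's implementation: the function names, the 0.2 threshold (a widely used rule of thumb), and the approval flag are assumptions made for the example.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between two numeric samples.

    Bins are equal-width over the range of `expected`; values of `actual`
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def retrain_decision(psi_value, threshold=0.2, approved_by_human=False):
    """Map a drift score to an action, gated by a human validation point."""
    if psi_value <= threshold:
        return "no_action"
    return "retrain" if approved_by_human else "awaiting_approval"


training = [i / 100 for i in range(100)]           # feature values at training time
production = [0.5 + i / 200 for i in range(100)]   # shifted values seen in production

score = psi(training, production)
print(retrain_decision(score))
print(retrain_decision(score, approved_by_human=True))
```

Keeping the approval step separate from the drift check means an automated trigger can propose a retrain without pushing an unreviewed model to production.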

Getting started with the right infrastructure is key to the accelerated growth of a machine learning team. We’ve worked with many organizations that spent years building in-house infrastructure to support their data science teams. Our advice: don’t reinvent the wheel. What these organizations failed to realize is that they were building for a moving target. You never know when your team or your AI production needs will have to scale. Teams that are trying to build solutions with high business impact should focus on that, not on keeping up with the most recent AI technology. That is what companies like cnvrg.io are for. Data science platforms like ours provide the latest MLOps tools and infrastructure to accelerate your machine learning development, so you can focus on impact and on earning company buy-in with your high-performing models.

cnvrg.io is a tool by data scientists, for data scientists. We’ve worked with data science teams across industries to provide everything a data scientist needs to build high-impact ML solutions. As data scientists, we understand that practitioners value the flexibility to use any language, framework or compute, whether on-premise or in the cloud. cnvrg.io integrates easily with existing IT infrastructure and avoids vendor lock-in, so users can choose the most economical multi-cloud or hybrid-cloud options for their specific use case. Our unified code-first workbench takes care of all the “plumbing” so data scientists and ML engineers can focus on building solutions, gathering insights, and delivering business value.