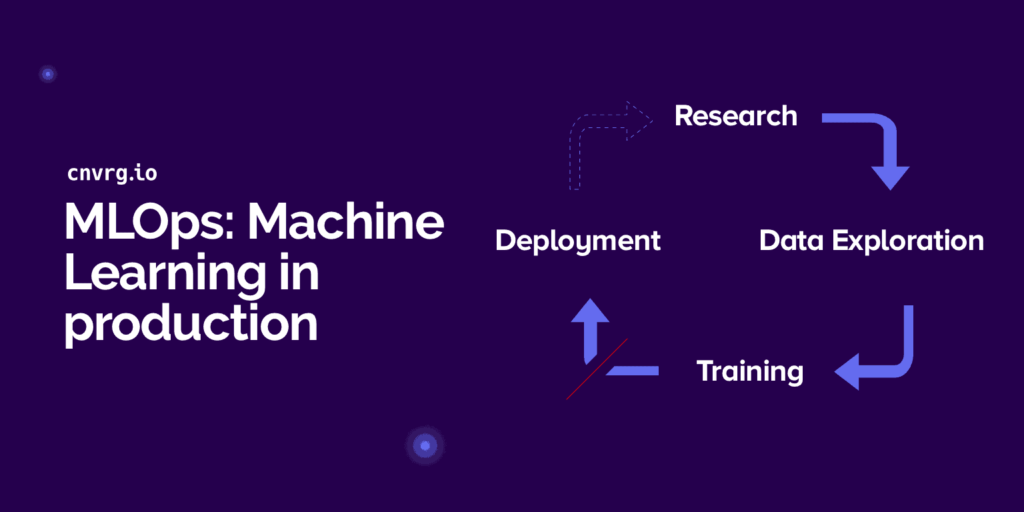

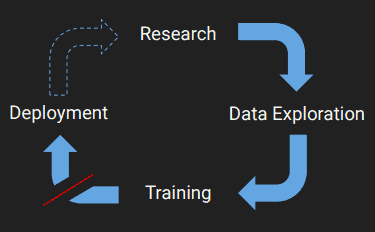

What is MLOps?

Machine Learning Operations, commonly abbreviated as MLOps, is a methodological framework for collaboration and communication between data scientists and operations personnel. Its purpose is to manage the production ML lifecycle: keeping collaboration between data science and engineering efficient and removing obstacles to swift delivery into production. It is often described as agile for data science workflows, and it helps establish a cleaner communication channel between teams.

MLOps has quickly become a standard practice for enterprise machine learning development, helping teams manage the entire ML lifecycle from research to production. Data scientists and engineering professionals often operate in silos, which makes it challenging for teams to work together toward organization-wide goals. MLOps aims to streamline the development, deployment, and operationalization of ML models. It supports the build, test, release, monitoring, performance tracking, reuse, maintenance and governance of ML models. In short, MLOps is the connective tissue between the data science team and DevOps or engineering units, so that everything runs without friction throughout your ML pipeline.

MLOps for Standardization

A lack of standardized processes between data science teams and DevOps teams causes friction and wastes time on both ends. This often leads to a slower, less efficient ML pipeline: technical delays pile up, and it becomes difficult to iterate on models. The variety of tools, languages, resources, and frameworks used in machine learning pipelines creates a messy work environment, which may not be up to par with enterprise-level production standards. MLOps seeks to standardize the process and unify the different tools and frameworks used by data scientists. It centralizes your model tracking, versioning and monitoring, integrates different technologies and languages, and standardizes the workflow.

Automated Model Tracking with MLOps

Model management can be difficult without an organizational framework to consolidate it. MLOps allows you to manage your data sets, version your code, support queries, manage your models, and efficiently route data to different experiments so that you can link them up quickly. This makes the data side more orderly, manageable, and ultimately reproducible. MLOps can track your entire end-to-end workflow, making it both reproducible and traceable.
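To make the tracking idea concrete, here is a minimal sketch of what a single tracked experiment record could look like. It is not cnvrg.io's implementation or any particular tool's API: the `RunRecord` class, the `fingerprint` helper, and the sample values (including the commit hash) are all hypothetical, and it uses only the Python standard library.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


def fingerprint(payload: bytes) -> str:
    """Return a SHA-256 hex digest identifying the exact bytes that were used."""
    return hashlib.sha256(payload).hexdigest()


@dataclass
class RunRecord:
    """Hypothetical record tying one experiment to its code, data and settings."""
    code_version: str      # e.g. a git commit hash
    data_fingerprint: str  # hash of the training data bytes
    params: dict           # hyperparameters used for the run
    metrics: dict          # results produced by the run

    def run_id(self) -> str:
        """Derive an ID from the inputs only, so identical inputs share an ID."""
        inputs = {
            "code": self.code_version,
            "data": self.data_fingerprint,
            "params": self.params,
        }
        return fingerprint(json.dumps(inputs, sort_keys=True).encode())[:12]


# Illustrative values only; a real pipeline would read these from git and storage.
train_csv = b"x,y\n1,2\n3,4\n"
record = RunRecord(
    code_version="abc1234",
    data_fingerprint=fingerprint(train_csv),
    params={"lr": 0.01, "epochs": 5},
    metrics={"accuracy": 0.91},
)
print(json.dumps({"run_id": record.run_id(), **asdict(record)}, indent=2))
```

Because the ID depends only on code, data and parameters, two runs with the same inputs are easy to spot, and any change to the data set produces a new ID.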

Best Practices for Machine Learning Operations

The reality today is that not all data scientists come from a DevOps background, and they do not always follow best practices. In addition, not all DevOps engineers are knowledgeable in machine learning, which causes endless friction. The main challenges for enterprise ML development are that it is slow and hard to standardize and scale, and that with so many tools and frameworks available, it is hard to know which are the best ones to use.

Reproducibility – having references to the code in earlier and present releases, as well as version control of the data that was used to train the model. With advanced end-to-end tracking mechanisms, you’ll be able to quickly reproduce results and meet overall governance requirements.

Traceability – keeping references to every data engineering script that was used to analyze, enhance and convert data for model consumption. Maintain a git-based method for committing and tracking changes, which helps engineers quickly deploy models to production.

Governance – delivering references to the outputs and logs connected to model bias or drift. Ensure your data is unbiased and that you have the proper KPIs and alerts to measure bias or underperformance. For more information on how to monitor your machine learning for bias, you can watch our webinar.

Integrability – recording the metadata at every step of the operationalization phase. Allow flexible exploration with integrations that support any language, framework and resource, with scalability and orchestration through Kubernetes.
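As one illustration of the alerts mentioned under governance, the sketch below compares the model scores seen in training with those seen in production and raises an alert when they diverge. It uses the Population Stability Index (PSI), a common drift measure chosen here for illustration and not a method named by this article. The bin edges, the sample scores and the 0.2 alert threshold are all assumptions you would tune for your own models.

```python
import math


def bin_fractions(values, edges):
    """Share of values in each bin [edges[i], edges[i+1]); the last bin also
    includes its upper edge. Values outside the edges are not counted."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    return [c / len(values) for c in counts]


def psi(baseline, live, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (live - base) * ln(live / base)."""
    total = 0.0
    for base, cur in zip(bin_fractions(baseline, edges), bin_fractions(live, edges)):
        base, cur = max(base, eps), max(cur, eps)  # avoid log(0) for empty bins
        total += (cur - base) * math.log(cur / base)
    return total


EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]
ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, not a universal constant

# Made-up scores for illustration: production has shifted toward high values.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
production_scores = [0.6, 0.7, 0.8, 0.8, 0.85, 0.9, 0.9, 0.9, 0.95]

drift = psi(training_scores, production_scores, EDGES)
if drift > ALERT_THRESHOLD:
    print(f"ALERT: score distribution drifted (PSI={drift:.2f})")
```

A check like this would typically run on a schedule, with the result logged next to the model version so that the governance trail shows when drift was first detected.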

Resource Management with MLOps

Managing different compute resources can be a problem when you are trying to run a quick job. Waiting for DevOps to spin up a CPU instance is not always an option when you want to run an experiment or tweak a model. MLOps can optimize resource management by connecting the resources you have available to your project. Connecting your resources helps you use available compute, and even scale automatically based on demand. These are all automated services that MLOps can provide, whether you’re on-premises, cloud-based or both. At cnvrg.io we are able to provide up to 80% savings on cloud costs with spot instances, where jobs automatically rerun on an available instance so you don’t even have to deal with downtime. MLOps can maximize your resources and take care of the resource management activities that would otherwise take up operations teams’ time. With automated resource dashboards, operations teams are able to oversee all compute activity, which gives data science and engineering teams a constant communication tool.
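The rerun behavior described above can be sketched in a few lines. This is only an illustration of the general pattern, not how cnvrg.io implements it: the `Preempted` exception, the `run_with_rerun` helper and the instance names are hypothetical, and a real scheduler would also resume from a checkpoint instead of restarting from scratch.

```python
class Preempted(Exception):
    """Hypothetical signal that a spot instance was reclaimed mid-job."""


def run_with_rerun(job, instances, max_attempts=3):
    """Try the job on each available instance in turn until one finishes."""
    attempts = []
    for instance in instances[:max_attempts]:
        try:
            result = job(instance)
            attempts.append((instance, "ok"))
            return result, attempts
        except Preempted:
            # The instance was reclaimed: record it and move to the next one.
            attempts.append((instance, "preempted"))
    raise RuntimeError(f"job preempted on every attempt: {attempts}")


def train(instance):
    """Stand-in training job; pretends the first spot instance gets reclaimed."""
    if instance == "spot-a":
        raise Preempted(instance)
    return f"model trained on {instance}"


result, attempts = run_with_rerun(train, ["spot-a", "spot-b", "on-demand-c"])
print(result)
print(attempts)
```

Listing an on-demand instance last is one possible design choice: cheap capacity is tried first, and the more expensive fallback is used only if every spot attempt is interrupted.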

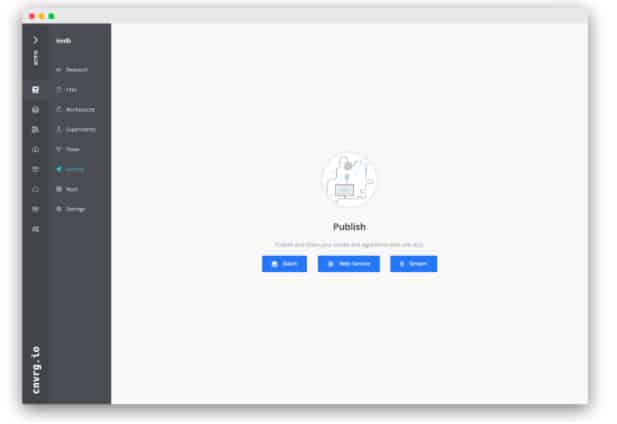

Model Deployment with MLOps

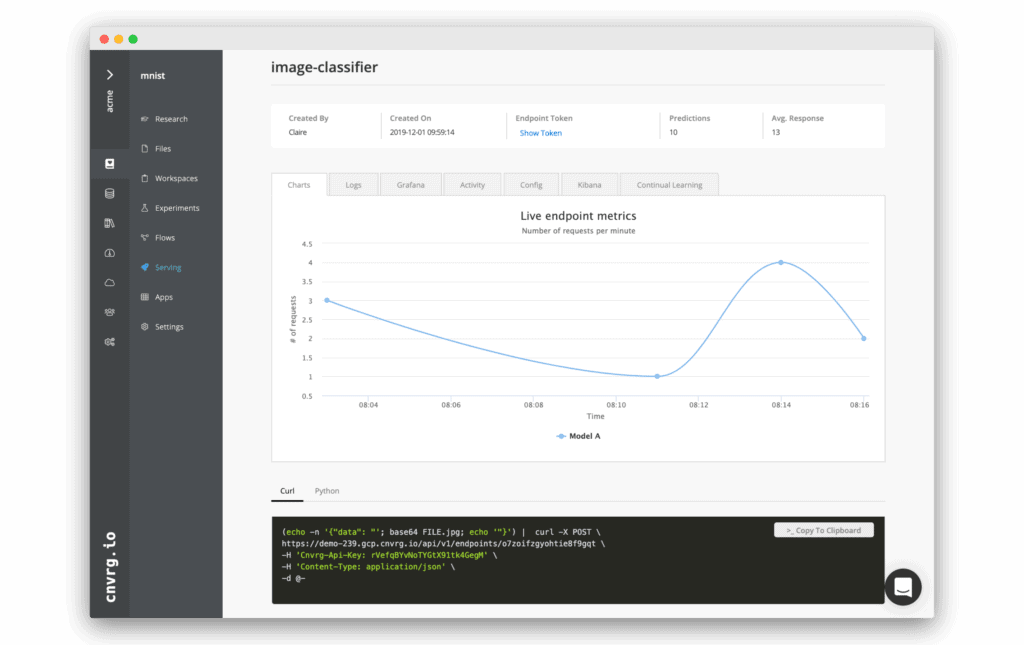

60% of models developed never make it into production. That is an alarming statistic considering how much time, resources and money enterprises invest in machine learning development. Deployment can be simplified with MLOps solutions. Instant access to Docker containers can help bring machine learning models into production faster, with consistent packaging and an automated code pipeline. Kubernetes can simplify the deployment, scaling and availability of AI workloads across multiple environments. cnvrg.io creates a synchronized machine learning workflow that allows you to train machine learning models built in different frameworks in a distributed manner, without manually configuring the training infrastructure. In addition, you can intelligently automate deployment with canary deployment features and CI/CD pipelines for a smooth rollout.
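To show what a canary deployment does in practice, here is a minimal sketch of the traffic split behind it: a small, fixed share of requests goes to the new model while the rest stay on the stable one. The `route` function and the request IDs are hypothetical. In a real deployment this split usually lives in the serving or ingress layer, not in application code.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Deterministically send about canary_percent% of request IDs to the canary."""
    digest = hashlib.sha256(request_id.encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in 0..99 for this request ID
    return "canary" if bucket < canary_percent else "stable"


# Simulate 10,000 requests with a 10% canary and report the observed share.
requests = [f"req-{i}" for i in range(10_000)]
canary_share = sum(route(r, 10) == "canary" for r in requests) / len(requests)
print(f"canary share: {canary_share:.1%}")
```

Hashing the request ID, instead of drawing a random number, keeps each ID on the same model version across calls. Raising the percentage only moves additional IDs to the canary, so a rollout can be widened step by step and rolled back by setting the value to 0.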

While machine learning pipelines can be challenging, they can deliver bountiful rewards to enterprise organizations. MLOps is the common thread pulling the entire operation together, bridging the gap between engineers and data scientists and greasing the wheels so that the entire process is streamlined and smooth and delivers steady, predictable, high-quality results in production. With a fully robust end-to-end platform, cnvrg.io has your MLOps covered, making the execution of your data science projects easier than ever.

Want to see more? Watch our MLOps webinar.