Launch any machine learning or deep learning framework in one click

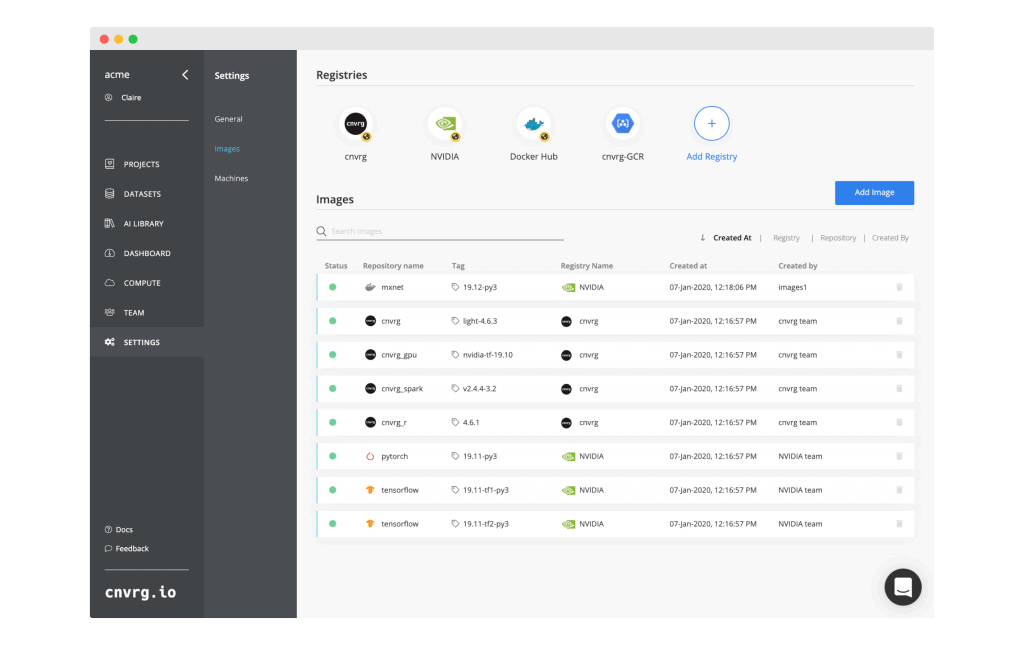

Many data scientists depend on DevOps or MLOps engineers to build custom images and to install and connect compute, work that can take hours or days to configure and deploy. Now it can all be done in one click. We’re happy to announce a new level of automation enabled by the integration of cnvrg.io with NVIDIA NGC containers.

cnvrg.io users now have instant access to NVIDIA NGC’s fully managed registry of optimized containers designed to run any framework you need. These containers run on any compute resource, on premises or in the cloud, shortening the path from research to production. The NGC Registry lets data scientists use all the tools they need on any compute resource in one step, launching any ML or DL framework in minutes. In this post, we cover what you need to know about the NGC integration and how it can help your team improve its MLOps.

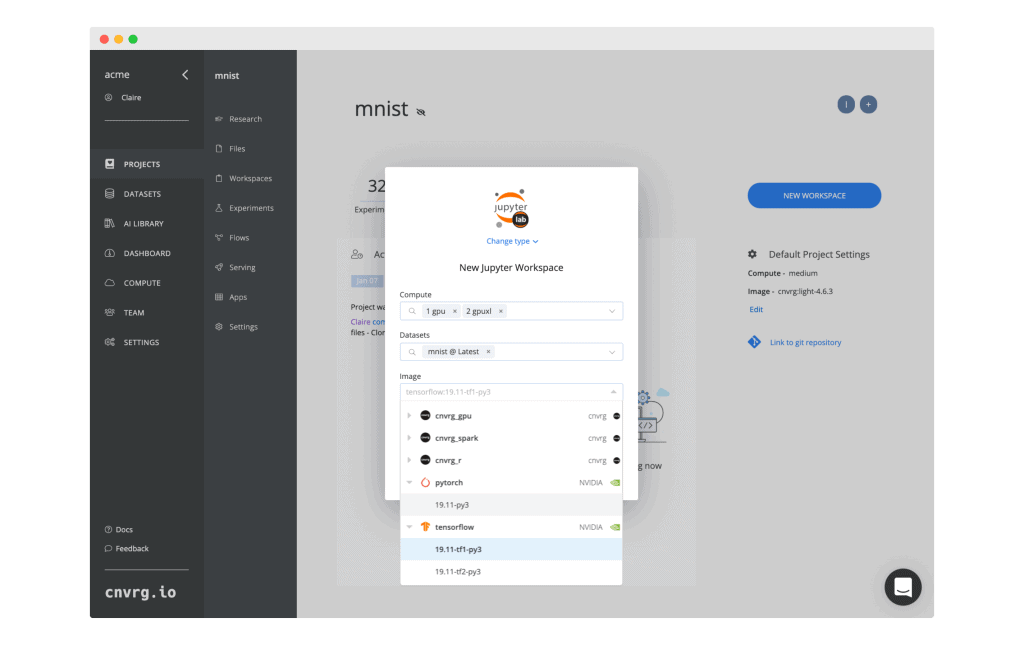

Spin up any ML or DL framework in one click

The new NGC integration lets you deploy AI frameworks and get a head start with pre-trained models or model training scripts. It supports domain-specific workflows and Helm charts to speed up AI deployments. cnvrg.io takes care of the infrastructure “plumbing” so data scientists and ML engineers can focus on building solutions, gathering insights, and delivering business value.

Save time with NGC’s ready-to-run containers

Through the integration, cnvrg.io users can pull any container from NGC’s registry and run it on any compute resource, on premises or off. In one click, you can run TensorFlow, PyTorch, Keras, CUDA Toolkit, Caffe2, MXNet, RAPIDS, and Torch. Containers are delivered ready to run with all necessary dependencies, such as the CUDA runtime and NVIDIA libraries, and are GPU-optimized for faster development and execution.

Extend resources with multi-cloud and hybrid-cloud capabilities

cnvrg.io helps you use all of your compute resources through a flexible multi-cloud and hybrid-cloud infrastructure. The new integration is built to run any NGC container on any compute, in the cloud, on premises, or both. cnvrg.io is a Kubernetes-native solution with advanced resource management that enables enterprises to expand resources and save on cloud costs. IT admin teams can view pipeline performance and KPI metrics in the automated compute dashboard.
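Because cnvrg.io is Kubernetes-native, running an NGC container ultimately means scheduling a pod that references an NGC image and requests GPUs. The sketch below is illustrative only and is not cnvrg.io’s API: the helper `ngc_pod_manifest` is hypothetical, it assumes NGC framework images follow the `nvcr.io/nvidia/<framework>:<tag>` naming, and it assumes the cluster runs the NVIDIA device plugin, which exposes GPUs as the `nvidia.com/gpu` resource. The tag `24.01-py3` is just an example.

```python
import json


# Hypothetical helper for illustration; not part of cnvrg.io or NGC tooling.
def ngc_pod_manifest(name: str, framework: str, tag: str, gpus: int = 1) -> dict:
    """Build a minimal Kubernetes Pod manifest that runs an NGC container.

    Assumes the nvcr.io/nvidia/<framework>:<tag> image naming and a cluster
    with the NVIDIA device plugin (GPUs exposed as "nvidia.com/gpu").
    """
    if gpus < 0:
        raise ValueError("gpus must be non-negative")
    container = {
        "name": framework,
        "image": f"nvcr.io/nvidia/{framework}:{tag}",
        "command": ["nvidia-smi"],  # placeholder workload: list visible GPUs
    }
    if gpus:
        # Extended resources such as GPUs are requested through limits.
        container["resources"] = {"limits": {"nvidia.com/gpu": gpus}}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"restartPolicy": "Never", "containers": [container]},
    }


if __name__ == "__main__":
    # Example tag only; check the NGC catalog for the tags that currently exist.
    manifest = ngc_pod_manifest("pytorch-smoke-test", "pytorch", "24.01-py3")
    # kubectl accepts JSON as well as YAML, e.g. `kubectl apply -f pod.json`.
    print(json.dumps(manifest, indent=2))
```

A platform like cnvrg.io hides this step behind its UI; the point of the sketch is only that the same manifest works on any conformant cluster, whether it runs in the cloud or on premises.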

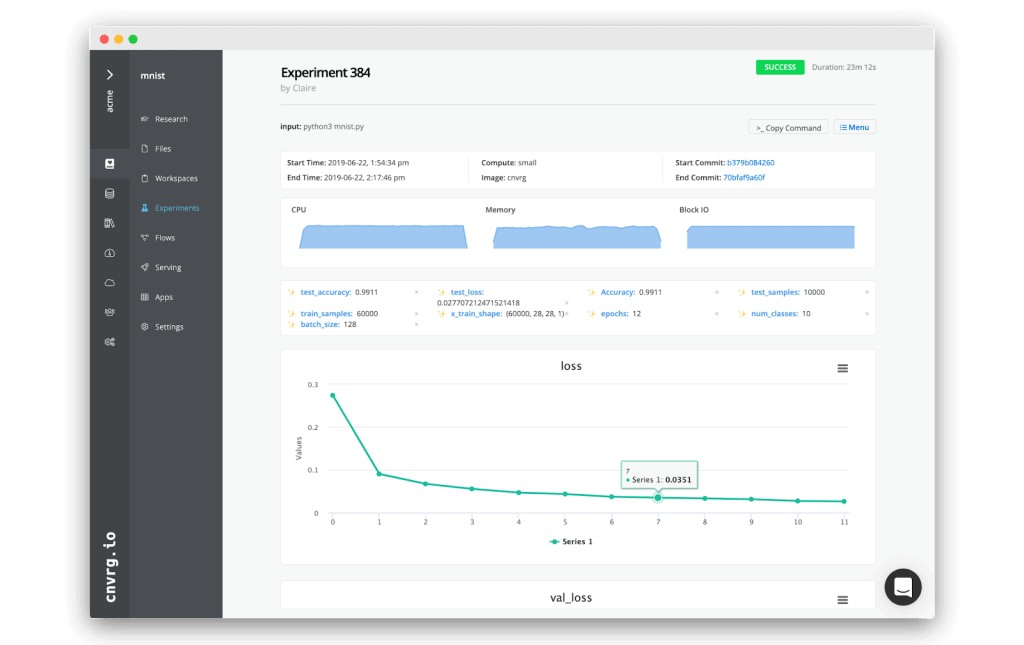

Instantly reproduce any ML model

The fully trackable, end-to-end monitoring system enables teams to reproduce results. cnvrg.io provides a unified hub for all of your machine learning tracking metadata, so you can easily rebuild models and quickly move from development into production.
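One common way to make tracked runs reproducible is to record everything that determines a result (container image, code revision, data version, hyperparameters, seed) and derive a stable fingerprint from it, so two runs with identical setups get the same ID. The sketch below shows that general technique; it is not how cnvrg.io stores metadata, and the field names and values are hypothetical placeholders.

```python
import hashlib
import json


def run_fingerprint(metadata: dict) -> str:
    """Return a stable SHA-256 fingerprint of a run's tracking metadata.

    json.dumps(sort_keys=True) sorts keys at every nesting level, so the hash
    does not depend on the order in which fields were recorded.
    """
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical fields: record whatever is needed to rebuild the model.
run = {
    "image": "nvcr.io/nvidia/pytorch:24.01-py3",  # example container tag
    "code_commit": "abc1234",                      # placeholder VCS revision
    "dataset_version": "v3",
    "hyperparameters": {"lr": 0.001, "batch_size": 64, "epochs": 10},
    "seed": 42,
}

if __name__ == "__main__":
    print(run_fingerprint(run)[:12])  # short ID suitable for a dashboard
```

Pinning the exact container tag in the metadata matters here: it is what lets a later run restore the same framework and library versions.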

Together with NVIDIA NGC, cnvrg.io gives teams a unified hub to standardize their data science and streamline the machine learning workflow. The cnvrg.io NGC integration is a breakthrough in MLOps because its built-in, pre-configured images eliminate heavy setup work and run AI frameworks instantly, with no dependencies to install.