Machine Learning (ML) and Artificial Intelligence (AI) are spreading across various industries. With the rapid increase of volume as well as the complexity of data, ML and AI are widely recommended for analysis and processing. The use of machine learning and artificial intelligence across industries demands more internal research which in turn means more money and time spent. But what if we could use one solution to solve multiple problems? What if we could have a single algorithm to tackle more than one issue?

In this post, we are going to demonstrate how we managed to use a well-known machine learning model to solve two completely different problems – speech recognition and image classification. In order to do this, we first need to define what an AI library (or ML library) is.

AI library is a script/pipeline/algorithm of a machine learning model which we would like to reuse. In order to reuse the machine learning component, we make the code as general as possible so we can simply plug any data (that fits some requirements of the algorithm) as an input and to receive the trained model as an output.

Cnvrg.io’s AI library

In order to solve speech recognition and image classification problems we would use a simple multiclass convolutional neural network model. This type of model is commonly used for computer vision, and for image classification in particular. But how would we use the same approach for both?

Datasets

For the speech recognition we would use –

https://www.kaggle.com/jbuchner/synthetic-speech-commands-dataset

This dataset includes 30 classes of .wav files where each class includes single class.

For the image classification we can use any dataset which includes some classes (2+) of images, even MNIST, CIFAR10, etc.

The Model

As mentioned earlier, we would use a convolutional neural network. The network will be composed of 5 layers:

1) Input layer with image_width X image_height neurons.

2) Conv2D layer with 32 neurons and MaxPooling.

3) Conv2D layer with 32 neurons and MaxPooling.

4) Dense layer with 64 neurons and 50% dropout.

5) Output layer

Conv_wind, pool_wind = (3, 3), (2, 2)

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Conv2D(32, conv_wind, input_shape=(img_width, img_height, 3)))

model.add(tf.keras.layers.Activation(activation_func_hidden_layers))

model.add(tf.keras.layers.MaxPooling2D(pool_size=pool_wind))

model.add(tf.keras.layers.Conv2D(32, conv_wind))

model.add(tf.keras.layers.Activation(activation_func_hidden_layers))

model.add(tf.keras.layers.MaxPooling2D(pool_size=pool_wind))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(64))

model.add(tf.keras.layers.Activation(activation_func_hidden_layers))

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Dense(num_of_classes))

model.add(tf.keras.layers.Activation(activation_func_output_layer))

model.compile(loss='categorical_crossentropy', optimizer='rmsprop', metrics=['accuracy'])

return model Approach

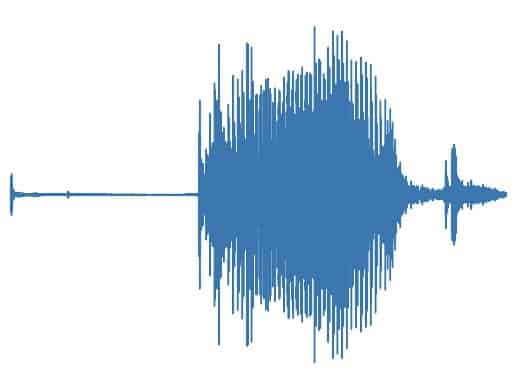

Now, we need to pre-process the audio files in order to train the model on them. In order to do this we need to convert them to images. The question is – How can you convert audio files to images? And the answer is – spectrograms.

Spectrograms

Defined by Wikipedia, a spectrogram is a visual representation of the spectrum of frequencies of a signal as it varies with time. When applied to an audio signal, spectrograms are sometimes called sonographs, voiceprints, or voicegrams. When the data is represented in a 3D plot they may be called waterfalls.

Once we have each audio file represented as an image, the task becomes very similar.

Now, let’s see a code example of converting audio to spectrogram:

from PIL import Image

from scipy import signal

from scipy.io import wavfile

def _get_single_spectrogram_img(wav_file_path, img_file_path):

"""

Receives a path to a .wav file, and save the spectrograms to the given path.

"""

sample_rate, samples = wavfile.read(wav_file_path)

frequencies, times, spectrogram = signal.spectrogram(samples, sample_rate)

img = Image.fromarray(spectrogram, 'RGB')

img.save(img_file_path)

Here is a “Wave” of the word Dog:

Training the model

After converting all the audio files to images, we can let the convolutional neural network train on the images as we would have done for any image classification problem.

Voila app:

- Audio samples, listen and predict

- Images: select and predict