Maximize GPU Utilization

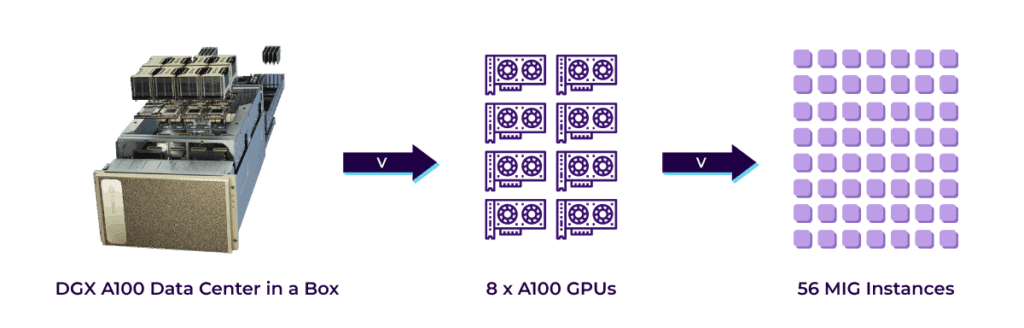

- Achieve up to 7X more GPU instances: partition a single NVIDIA A100 GPU into as many as seven GPU instances, and the eight A100 GPUs in an NVIDIA DGX A100 into as many as 56

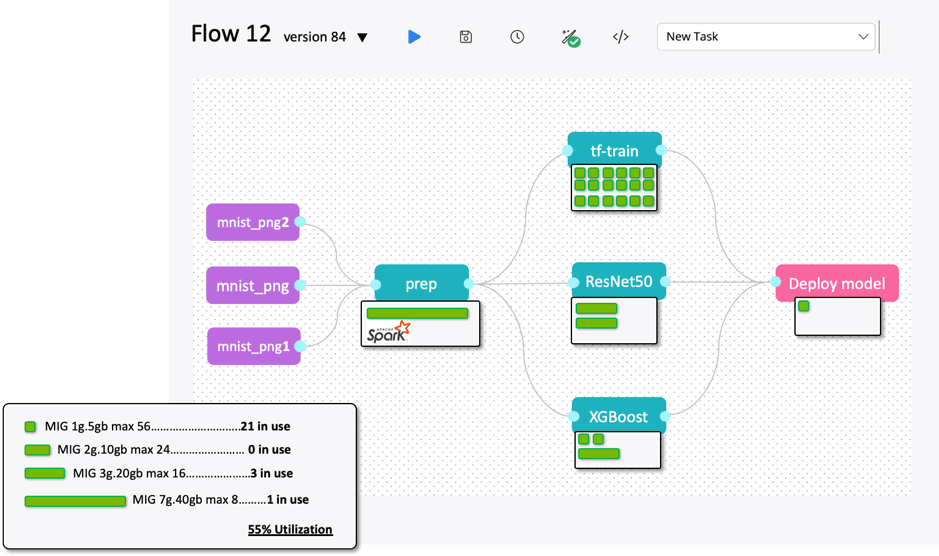

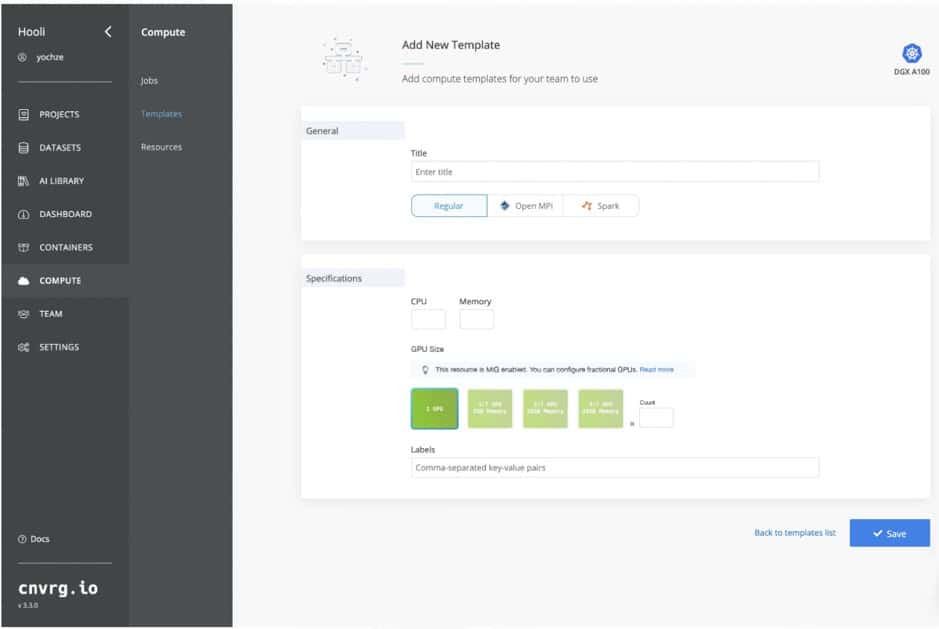

- Allocate a right-sized GPU instance with guaranteed quality of service (QoS) to every job

- Enable inference, training, and HPC workloads to run at the same time on a single GPU

- Automate Multi-Instance GPU (MIG) pool management and return instances to the pool as soon as a job completes
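To make the right-sizing and pool-return ideas above more concrete, here is a minimal sketch of a scheduler-side MIG pool. It is illustrative only: `MigPool`, `allocate`, and `release` are hypothetical names, the pool simply tracks pre-created instance IDs, and it ignores real MIG placement rules and does not call NVIDIA tools such as `nvidia-smi` or NVML. The profile table is a subset of the A100 40GB MIG profiles, each given as (compute slices, memory in GB).

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Subset of A100 40GB MIG profiles: name -> (compute slices, memory in GB).
PROFILES = {
    "1g.5gb": (1, 5),
    "2g.10gb": (2, 10),
    "3g.20gb": (3, 20),
    "4g.20gb": (4, 20),
    "7g.40gb": (7, 40),
}


@dataclass
class MigPool:
    """Hypothetical pool of pre-created MIG instances, grouped by profile."""

    free: dict[str, list[str]] = field(default_factory=dict)
    # job_id -> (profile, instance_id) for instances currently assigned
    in_use: dict[str, tuple[str, str]] = field(default_factory=dict)

    def add(self, profile: str, instance_id: str) -> None:
        """Register an existing instance as available."""
        self.free.setdefault(profile, []).append(instance_id)

    def allocate(self, job_id: str, mem_gb: int, slices: int = 1):
        """Give the job the smallest free profile that fits, or None."""
        fitting = sorted(
            (p for p, (s, m) in PROFILES.items() if m >= mem_gb and s >= slices),
            key=lambda p: PROFILES[p],  # smallest (slices, memory) first
        )
        for profile in fitting:
            if self.free.get(profile):
                instance_id = self.free[profile].pop()
                self.in_use[job_id] = (profile, instance_id)
                return profile, instance_id
        return None  # nothing suitable is free right now

    def release(self, job_id: str) -> None:
        """Return the job's instance to the pool as soon as the job ends."""
        profile, instance_id = self.in_use.pop(job_id)
        self.free.setdefault(profile, []).append(instance_id)
```

For example, a pool holding seven `1g.5gb` instances (one A100 split seven ways) can serve seven small jobs at once; an eighth job waits until `release` hands an instance back. Choosing the smallest fitting profile keeps larger instances free for jobs that actually need them.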