
Introduction to Spiking Neural Networks

Nowadays, Deep Learning (DL) is a hot topic within the Data Science community. Despite already being quite effective in various tasks across industries, Deep Learning is constantly evolving, proposing new neural network (NN) architectures, new DL tasks, and even brand-new concepts for the next generation of NNs, such as the Spiking Neural Network (SNN).

In this article we will talk about:

- What is a Spiking Neural Network?

- How does a Spiking Neural Network work?

- The key concept of SNN operation

- SNN neuron models

- SNN architectures

- How to train an SNN?

- Deep Learning in SNNs

- Advantages and disadvantages of Spiking Neural Networks

- Challenges of Spiking Neural Networks

- How to build a Spiking Neural Network?

- TensorFlow

- SpykeTorch

- Spiking Neural Networks Real-Life Applications

- Future of Spiking Neural Networks

Let’s jump in.

What is a Spiking Neural Network?

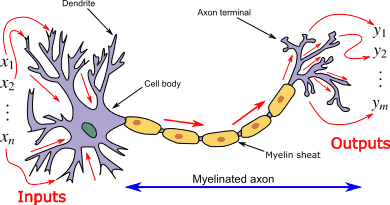

As you might know, the general idea of an artificial neural network (ANN) comes from biology. Each one of us has a biological neural network inside our brain that is used for information and signal processing, decision making, and many other things.

The base element of a biological neural network is a biological neuron.

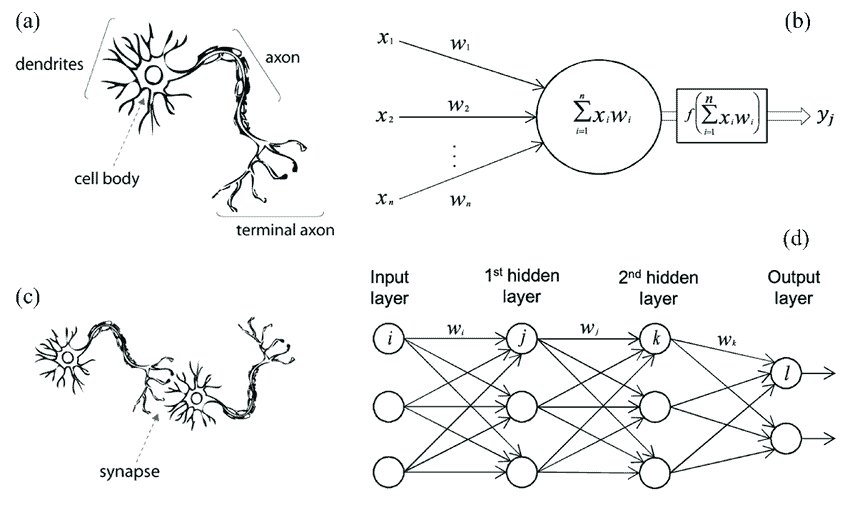

Source: Wikipedia

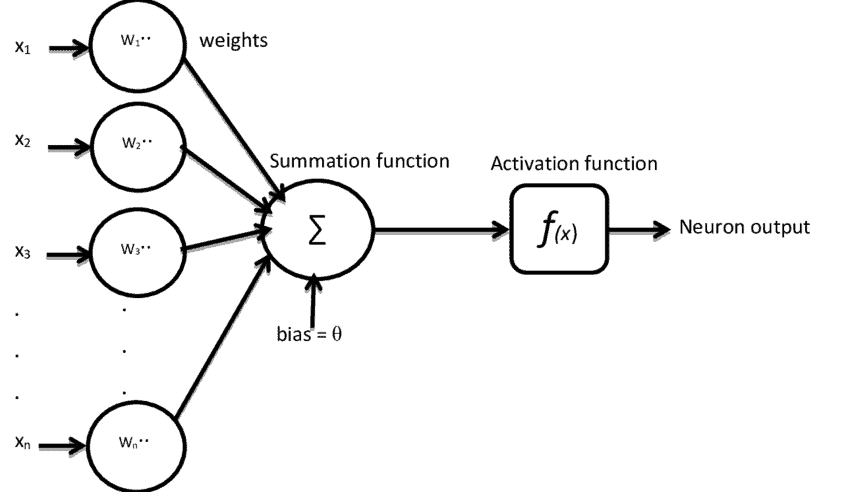

In artificial neural networks, by contrast, artificial neurons are used.

Image source: Artificial neuron

Despite their apparent similarity, artificial neurons do not actually mimic the behaviour of biological ones. Thus, biological and artificial NNs are fundamentally different in:

- General structure

- Neural computations

- Learning rules

Image source: ResearchGate (a) biological neuron; (b) artificial neuron; (c) biological synapse; and (d) ANN synapses

This is where the idea of a Spiking Neural Network comes in: let’s create a NN that uses biologically realistic neurons instead of artificial ones. Such a network is an SNN.

The first scientific model of a spiking neuron was proposed by Alan Hodgkin and Andrew Huxley in 1952. The model described how action potentials in biological neurons are initiated and propagated. However, impulses between biological neurons are not transmitted directly: such communication requires the exchange of chemicals called neurotransmitters in the synaptic gap.

How does a Spiking Neural Network work?

The key concept of SNN operation

The key difference between a traditional ANN and an SNN is how information propagates.

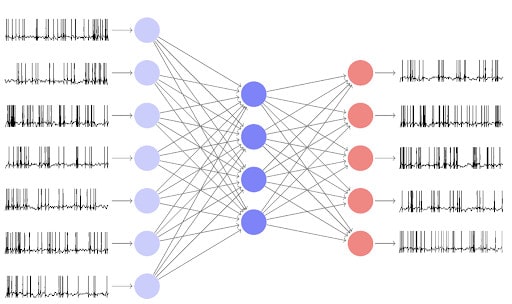

An SNN tries to mimic a biological neural network more closely. This is why, instead of the continuous values that an ANN works with, an SNN operates with discrete events (spikes) that occur at specific points in time. An SNN receives a series of spikes as input and produces a series of spikes as output; such a series is usually referred to as a spike train.
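A spike train can be represented as a sequence of 0/1 values, one per time step. The sketch below assumes rate coding, one common way (not prescribed by this article) of turning a continuous input such as a pixel intensity into spikes: the higher the value, the more often the neuron fires. The function names `rate_encode` and `spike_times` and the `max_rate` parameter are hypothetical.

```python
import random


def rate_encode(intensity, n_steps, max_rate=0.5, seed=0):
    """Convert a continuous value in [0, 1] into a binary spike train.

    At each time step a spike (1) is emitted with probability
    intensity * max_rate, a Bernoulli approximation of a Poisson process.
    max_rate and seed are illustrative assumptions.
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be in [0, 1]")
    rng = random.Random(seed)  # seeded so the train is reproducible
    p = intensity * max_rate
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]


def spike_times(train):
    """Return the time steps at which the train contains a spike."""
    return [t for t, s in enumerate(train) if s]


train = rate_encode(0.6, n_steps=20)
print(train)
print(spike_times(train))
```

The same information could instead be carried by the exact timing of spikes; rate coding is shown only because it is the simplest to write down.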

The general idea is as follows:

- At every moment, each neuron holds a value analogous to the electrical (membrane) potential of a biological neuron;

- The value changes according to the neuron’s mathematical model; for example, when a neuron receives a spike from an upstream neuron, its value may increase or decrease;

- If the value exceeds a threshold, the neuron sends a single impulse to each downstream neuron connected to it;

- After this, the neuron’s value instantly drops below its resting (average) level, which is the analog of a biological neuron’s refractory period. Over time, the value smoothly returns to its resting level.
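The four rules above can be sketched as a single neuron simulated in discrete time steps. This is a minimal illustration, assuming exponential recovery toward the resting level and excitatory input spikes with a fixed weight; `simulate_neuron` and all parameter values are hypothetical, not taken from any library.

```python
import math


def simulate_neuron(input_spikes, weight=0.6, threshold=1.0, rest=0.0,
                    reset=-0.5, tau=5.0, dt=1.0):
    """Simulate one neuron following the rules above in discrete time steps.

    input_spikes: list of 0/1 values, one per time step (1 = upstream spike).
    All parameter values are illustrative assumptions, not biological constants.
    Returns the value trace and the list of output spike times.
    """
    v = rest
    trace, out_spikes = [], []
    decay = math.exp(-dt / tau)  # fraction of the deviation from rest kept per step
    for t, s in enumerate(input_spikes):
        # Between events the value relaxes smoothly back toward the resting level.
        v = rest + (v - rest) * decay
        # An incoming spike changes the value (excitatory here, since weight > 0).
        v += weight * s
        if v >= threshold:
            out_spikes.append(t)  # fire a single impulse downstream
            v = reset             # drop below rest: refractory-like undershoot
        trace.append(v)
    return trace, out_spikes


trace, spikes = simulate_neuron([1, 1, 0, 0, 0, 1, 1, 1, 0, 0])
print(spikes)  # [1, 7]
```

In the first burst, two input spikes starting from rest are enough to fire; in the second burst it takes three, because the neuron starts from below its resting level after the previous spike.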

SNN neuron models

SNN neurons are built on mathematical descriptions of biological neurons. There are two basic groups of models used for SNN neurons:

- Conductance-based models describe how action potentials in neurons are initiated and propagated;

- Threshold models generate an impulse when the neuron’s value reaches a certain threshold.

Although all these models try to describe biological neurons, the devil is in the details, so SNN neurons built on different models might behave slightly differently.

The most commonly used SNN neuron model is the Leaky Integrate-and-Fire (LIF) threshold model. In this model, the value in the neuron represents its momentary activation level, and its evolution over time is described by a differential equation. Incoming spikes change the value, which then either leaks away back toward the resting level or reaches the threshold. If the threshold is reached, the neuron sends impulses to the downstream neurons and its value drops below the resting level.
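In its common textbook form, the LIF membrane potential V follows τ_m dV/dt = -(V - V_rest) + R_m I(t), and the neuron fires and resets whenever V reaches the threshold. The sketch below integrates that equation with the forward Euler method; the input current, units, and parameter values are illustrative assumptions, and `lif_euler` is a hypothetical name.

```python
def lif_euler(current, dt=0.1, tau_m=10.0, r_m=1.0, v_rest=0.0,
              v_th=1.0, v_reset=-0.2):
    """Forward-Euler integration of a leaky integrate-and-fire neuron:

        tau_m * dV/dt = -(V - v_rest) + r_m * I(t)

    with the rule: if V >= v_th, record a spike and set V = v_reset.
    current: list of input current values, one per time step of length dt (ms).
    Units and parameter values are illustrative assumptions.
    Returns the list of spike times in ms.
    """
    v = v_rest
    spike_times = []
    for step, i_t in enumerate(current):
        # Leak toward v_rest plus the driving input, scaled by dt / tau_m.
        v += (-(v - v_rest) + r_m * i_t) * dt / tau_m
        if v >= v_th:
            spike_times.append(step * dt)
            v = v_reset  # reset below the resting level after a spike
    return spike_times


print(len(lif_euler([0.9] * 2000)))  # 0: steady state 0.9 stays below threshold
print(len(lif_euler([2.0] * 1000)), len(lif_euler([3.0] * 1000)))
```

Because the steady-state potential for a constant current is V_rest + R_m I, a current of 0.9 never reaches the threshold of 1.0, while stronger currents make the neuron fire at increasing rates.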

SNN architectures

Despite its unique concept, an SNN is still a neural network, so SNN architectures can be divided into three groups:

- A Feedforward Neural Network is the classical NN architecture that is widely used across all industries. In such an architecture, data flows strictly in one direction, from inputs to outputs; there are no cycles, and processing can take place over many hidden layers. Most modern ANN architectures are feedforward;

- A Recurrent Neural Network (RNN) is a somewhat more advanced architecture. In RNNs, connections between neurons form a directed graph along a temporal sequence, which allows the network to exhibit temporal dynamic behavior. A recurrent SNN is therefore dynamic and has high computational power;

- In a Hybrid Neural Network, some neurons have feedforward connections whereas others have recurrent connections. Moreover, the connections between these groups may themselves be either feedforward or recurrent. Two types of Hybrid Neural Networks can be used as an SNN architecture:

  - A synfire chain is a multilayer network in which impulse activity propagates as a synchronous wave of spike trains from one layer to the other and back;

  - Reservoir computing can be used to build a reservoir SNN that consists of a recurrent reservoir and output neurons.
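The difference between feedforward and recurrent connectivity can be sketched with one toy layer of leaky spiking neurons. This is an assumption-laden illustration rather than a specific published architecture: `run_layer`, the weight layout, the decay factor, and reset-to-zero are hypothetical choices. Passing `w_rec=None` gives a feedforward layer; passing a recurrent weight matrix lets the layer’s own spikes from the previous step feed back into it.

```python
def run_layer(input_trains, w_in, w_rec=None, threshold=1.0, decay=0.9):
    """Run one layer of simple leaky spiking neurons over discrete time steps.

    input_trains: list over time of 0/1 lists (one entry per input neuron).
    w_in[j][i]: weight from input neuron i to layer neuron j (feedforward).
    w_rec[j][k]: weight from layer neuron k to layer neuron j (recurrent);
                 pass None for a purely feedforward layer.
    Returns the layer's output spikes as a list over time of 0/1 lists.
    """
    n = len(w_in)
    v = [0.0] * n
    prev_out = [0] * n  # layer spikes from the previous step (used by recurrence)
    outputs = []
    for x in input_trains:
        out = []
        for j in range(n):
            drive = sum(w * s for w, s in zip(w_in[j], x))
            if w_rec is not None:
                drive += sum(w * s for w, s in zip(w_rec[j], prev_out))
            v[j] = decay * v[j] + drive  # leaky integration
            if v[j] >= threshold:
                out.append(1)
                v[j] = 0.0  # reset after a spike
            else:
                out.append(0)
        outputs.append(out)
        prev_out = out
    return outputs


inputs = [[1], [0], [0]]   # one input neuron that spikes once at t = 0
w_in = [[1.0], [0.0]]      # only layer neuron 0 receives the input
w_rec = [[0.0, 0.0], [1.0, 0.0]]  # neuron 0 -> neuron 1 inside the layer
print(run_layer(inputs, w_in))         # [[1, 0], [0, 0], [0, 0]]
print(run_layer(inputs, w_in, w_rec))  # [[1, 0], [0, 1], [0, 0]]
```

In the recurrent run, neuron 1 receives no direct input at all and fires only because neuron 0 spiked one step earlier; with `w_rec=None` it stays silent.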

How to train an SNN?

Unfortunately, as of today, there is no effective, interpretable supervised learning method for training an SNN. The way SNNs operate does not allow the use of the classical learning methods that work for other NNs: a spike is an all-or-nothing event, so a neuron’s output is a step function of its value, whose gradient is zero almost everywhere and undefined at the threshold, which leaves gradient descent with nothing to follow. This is why training an SNN can be a tough task. Still, scientists are searching for an optimal method.
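The zero-gradient problem is easy to see numerically. The sketch below treats spiking as a step (Heaviside) function of the neuron’s value and estimates its derivative with finite differences. It also shows a surrogate gradient, a smooth stand-in derivative used in some of the research on directly training SNNs; the "fast sigmoid" shape and the `slope` parameter are assumptions, and all function names are hypothetical.

```python
def spike(v, threshold=1.0):
    """Heaviside step: 1 if the value reaches the threshold, else 0."""
    return 1.0 if v >= threshold else 0.0


def numerical_grad(f, v, eps=1e-4):
    """Central finite-difference estimate of df/dv."""
    return (f(v + eps) - f(v - eps)) / (2 * eps)


def surrogate_grad(v, threshold=1.0, slope=10.0):
    """Derivative of a 'fast sigmoid', used in place of the step's derivative.

    It is smooth, positive everywhere, and peaks (value 1) at the threshold;
    slope is an assumed hyperparameter controlling how sharp the peak is.
    """
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


for v in (0.5, 0.9, 1.5):
    # The true derivative is 0 away from the threshold (and explodes at it),
    # so it gives no useful learning signal; the surrogate does.
    print(v, numerical_grad(spike, v), surrogate_grad(v))
```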

Nevertheless, you can apply the following methods to SNNs training:

- Unsupervised Learning

- Supervised Learning

- Reinforcement Learning

As you might have noticed, most of the learning methods mentioned above are more closely related to biology than to machine learning. If you want to work with SNNs, be ready to study the biological aspects of the topic, because they are key to SNNs. And if you do manage to train an SNN, the next logical step is to share your experience with the community to help the field develop. SNNs are an active area of research, so any experience will be relevant.
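As an example of a biologically inspired, unsupervised rule, here is a sketch of pair-based spike-timing-dependent plasticity (STDP). STDP is not named in the list above; it is used here only as an illustration. A synapse is strengthened when the presynaptic spike comes shortly before the postsynaptic one and weakened in the opposite order. The amplitudes, time constants, and weight bounds are assumptions, and `stdp_update` is a hypothetical name.

```python
import math


def stdp_update(w, pre_times, post_times, a_plus=0.01, a_minus=0.012,
                tau_plus=20.0, tau_minus=20.0, w_min=0.0, w_max=1.0):
    """Pair-based STDP update for a single synapse.

    For every (pre, post) spike pair with dt = t_post - t_pre:
      dt > 0 (pre before post): potentiate by a_plus * exp(-dt / tau_plus)
      dt < 0 (post before pre): depress by a_minus * exp(dt / tau_minus)
      dt == 0: no change
    Spike times are in ms; all constants are illustrative assumptions.
    The updated weight is clipped to [w_min, w_max].
    """
    dw = 0.0
    for t_pre in pre_times:
        for t_post in post_times:
            dt = t_post - t_pre
            if dt > 0:
                dw += a_plus * math.exp(-dt / tau_plus)
            elif dt < 0:
                dw -= a_minus * math.exp(dt / tau_minus)
    return min(w_max, max(w_min, w + dw))


print(stdp_update(0.5, pre_times=[10.0], post_times=[15.0]))  # slightly above 0.5
print(stdp_update(0.5, pre_times=[15.0], post_times=[10.0]))  # slightly below 0.5
```

No labels are involved: the weight changes only from the relative timing of spikes, which is also why such a rule can keep running while the network is in use.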

Deep Learning in SNNs

As you might know, the more layers a neural network has, the deeper it is considered to be. So, in theory, you can stack multiple hidden layers in your SNN and consider it a deep Spiking Neural Network. However, as of today, the performance of directly trained deep SNNs is not as good as that of traditional Deep Neural Networks reported in the literature. So, developing a deep SNN with performance comparable to traditional deep learning methods is a challenging task that is yet to be solved.

Advantages and disadvantages of Spiking Neural Networks

Spiking Neural Networks have several clear advantages over the traditional NNs:

- SNNs are dynamic, so they excel at working with dynamic processes such as speech and dynamic image recognition;

- An SNN can continue to learn while it is already running;

- In some architectures, such as reservoir SNNs, you only need to train the output neurons;

- SNNs usually have fewer neurons than traditional ANNs;

- SNNs can work very fast, since neurons send binary impulses rather than continuous values;

- SNNs offer more efficient information processing and better noise immunity, since they use a temporal representation of information.
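One reason behind the speed claim is that spikes are binary: a neuron’s input is just the sum of the weights of the inputs that actually spiked, so silent inputs cost nothing and no multiplications are needed. The sketch below compares that event-driven update with an ANN-style weighted sum; the operation counts are a simplified model that ignores memory access and the cost of simulating time steps, and both function names are hypothetical.

```python
def dense_input(weights, activations):
    """ANN-style input: one multiply-accumulate per connection."""
    total, ops = 0.0, 0
    for w, a in zip(weights, activations):
        total += w * a
        ops += 1
    return total, ops


def event_driven_input(weights, spike_indices):
    """SNN-style input: spikes are binary, so only the weights of inputs
    that actually spiked are added; silent inputs cost nothing."""
    total, ops = 0.0, 0
    for i in spike_indices:
        total += weights[i]
        ops += 1
    return total, ops


weights = [0.5, -0.25, 0.75, 1.0]
spikes = [1, 0, 0, 1]
active = [i for i, s in enumerate(spikes) if s]
print(dense_input(weights, spikes))         # (1.5, 4)
print(event_driven_input(weights, active))  # (1.5, 2)
```

Both give the same input value, but the event-driven version does work proportional to the number of spikes, which is where sparse spiking activity pays off.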

Unfortunately, SNNs also have two major disadvantages:

- SNNs are hard to train. As of today, there is no learning method designed specifically for this task;

- It is impractical to build a small SNN.

Challenges of Spiking Neural Networks

In theory, SNNs are more powerful than the current generation of NNs. Still, there are two serious challenges that need to be solved before SNNs can be widely used:

- The first challenge is the lack of a learning method developed specifically for SNN training. The specifics of SNN operation prevent Data Scientists from effectively using traditional learning methods such as gradient descent. There are unsupervised, biologically inspired learning methods that can be used to train an SNN, but they are time-consuming and often not worth it, since a traditional ANN will learn both faster and better;

- The second challenge is hardware. Simulating SNNs on conventional hardware is computationally expensive, as it requires solving many differential equations. Thus, you will not be able to work effectively locally without specialized hardware.

How to build a Spiking Neural Network?

Sure, working with SNNs is a challenging task. Still, there are some tools you might find interesting and useful:

- If you want software that helps to simulate Spiking Neural Networks and is mainly used by biologists, you might want to check:

- If you want software that can be used to solve real rather than theoretical problems, you should check:

Anyway, if you simply want to get a feel for the field, you should probably use either TensorFlow or SpykeTorch. Still, please be aware that working with SNNs locally without specialized hardware is very computationally expensive.

TensorFlow

You can definitely create an SNN using TensorFlow, but because this deep learning framework was not initially created to work with SNNs, you’ll have to write a lot of code yourself. Please check the related notebooks on the topic, which feature a basic SNN simulation and will help you get started:

SpykeTorch

SpykeTorch is a PyTorch-based Python simulator of convolutional spiking neural networks. Fortunately, it was developed specifically to work with SNNs, so you can use its high-level API to do your task effectively.

Despite the incomplete documentation, the simulator has a great tutorial for a smooth start.

Spiking Neural Networks Real-Life Applications

SNNs can be applied in various industries, for example:

- Prosthetics: As of today, there are already visual and auditory neuroprostheses that use spike trains to transmit signals to the nervous system; visual prostheses, for example, send signals to the visual cortex and help patients regain the ability to orient themselves in space. Scientists are also working on mechanical motor prostheses that use the same approach. Moreover, spike trains delivered to the brain through implanted electrodes can help alleviate the symptoms of Parkinson’s disease, dystonia, chronic pain, and schizophrenia.

- Robotics: Brain Corporation, based in San Diego, develops robots using SNNs, whereas the DARPA SyNAPSE program develops neuromorphic systems and processors.

- Computer Vision: Computer Vision is a field that could benefit strongly from SNNs for automatic video analysis. The IBM TrueNorth digital neurochip can help with that: it includes one million programmable neurons and 256 million programmable synapses to simulate the functioning of neurons in the visual cortex. This neurochip is often considered the first hardware tool designed specifically to work with SNNs.

- Telecommunications: Qualcomm is actively researching the possibility of integrating SNNs in telecommunication devices.

Future of Spiking Neural Networks

There are two opinions on SNNs among Data Scientists: a skeptical and an optimistic one.

Optimists think that SNNs are the future, because:

- They are the next logical step in the evolution of NNs;

- In theory, they are more powerful than traditional ANNs;

- There are already SNN implementations that show the potential of SNNs.

On the other hand, skeptics feel that SNNs are overrated for several reasons:

- There is no learning method designed specifically for SNNs;

- Effective working with SNNs requires specialized hardware;

- They are not commonly used across industries, remaining either a niche solution or a fancy idea;

- SNNs are less interpretable than ANNs;

- There are more theoretical articles on SNNs than practical ones;

- Although SNNs have been around for a while, there is still no major breakthrough in the field.

Thus, the future of SNNs is unclear. From my perspective, SNNs simply need a bit more time and research before they become relevant.

Final Thoughts

To summarize, we started with the basic concept behind Spiking Neural Networks, talked about the key concept of SNN operation, and covered SNN neuron models and architectures. We also talked about learning methods that might help to train an SNN and mentioned the advantages, disadvantages, and challenges of working with SNNs. Lastly, we reviewed tools for building Spiking Neural Networks and their real-life applications, and shared some thoughts on the future of SNNs.

If you enjoyed this post, a great next step would be to start exploring the field, trying to learn as much as possible about Spiking Neural Networks. Check out a few must-read papers on the topic:

- Deep Learning in Spiking Neural Networks

- FPGA Implementation of Simplified Spiking Neural Network

- Efficient Spiking Neural Networks with Radix Encoding

- Visual Explanations from Spiking Neural Networks using Interspike Intervals

Thanks for reading, and happy training!

Resources

- SpykeTorch: Efficient Simulation of Convolutional Spiking Neural Networks with at most one Spike per Neuron

- Training Deep Spiking Neural Networks Using Backpropagation

- Qualcomm Zeroth is advancing deep learning in devices [VIDEO]

- Biological neuron model

- The differences between Artificial and Biological Neural Networks

- Spiking Neural Networks, the Next Generation of Machine Learning